There is definitely a difference...

ACAP - All Capitalists Are Bastards. (see * below)

Just take a look - and yes, I'm aware this news site is left-leaning, that's why I read it every day:

- USA Republican story.

- Australia Labor story.

Those two stories are co-contemporary on The New Daily today and they tell the whole story. All there is to know in this world. The alpha and the omega. About the state of our planet.

1: Using the debt ceiling to wrangle changes in policy. What changes? Oh, just the usual, see how many people we can put into deeper poverty or kill off. Our wealthy buddies need the space.

2: Fixing trade relationships, making the medical and benefits systems more fair and raising more people out of life-threatening poverty. Speed up adoption of renewable/clean energy.

There endeth the lesson.

Why Did I Post This?

These first two stories were in my TND (The New Daily) newsletter email this morning. Yep, I get daily newsletters from a few dozen news sources and Read them for things to comment on or act on. I find it pays me to Know stuff, just like Tyrion. I highly suggest you Read things and Know things too, but I'm not suggesting you should receive 40 - 80 articles a day and Read A Selected 10 - 15 Of Them. My email newsletter ("Subscribe" link in the banner above, hint hint...) only comes out once a week on Fridays and contains between 1 and 6 articles per issue.

But two stories in the same issue and showing the diametrically opposite approaches of the right and left, that was an irony I couldn't resist. As I mentioned in a previous article, there are many approaches to government.

The Right are inevitably paranoid, greedy, cruel, and authoritarian; and they brook no discussion. "Either give it all to us, be obedient to us, be like us - or we'll kill you, one way or another." And the circle that constitutes "us" in that particular equation always gets smaller and more selective, until there's only one person left standing.

Those on the Left tend to be far more egalitarian, far less assertive, and recognise a greater percentage of their fellow beings as "humans like ourselves." But they too are prone to Otherism, cruelty, and - the scourge of the planet - capitalism.

Look at the state of the planet: 1% own most of the wealth, the richest 10% - including that 1% - own 80% of the planet's wealth, and the poorest people comprise something like 40% of the population and share in 20% of the wealth. No matter which "wing" of government you feel kinship with, they all allow this inequality to stand in the overall picture. It's just a small shift in how many citizens that government is willing to kill.

Governments get too used to "running things around here." Don't forget that governments came out of kingdoms and fiefdoms and before that village councils and before that small groups - all usually headed by the most sociopathic alpha in the group.

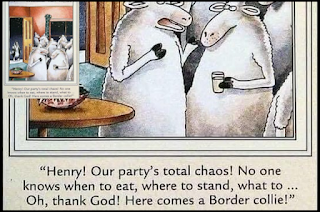

But is the time for authoritarian protectionist elitist capitalism over now? Because the gloves are pretty much going to be coming off: with the promise of AI and robots and automation (Robots are coming - have a read) it would appear that there'll no longer be a need for 90% of the world's population. Both sides seem okay with that, and it's only the actual percentages they differ on. Maybe what we need is a smarter flock.

The linked article is about a company called Sanctuary AI who are producing a 5'7" robot called Phoenix. Well, they're releasing a few in their immediate vicinity, at any rate. To local businesses. At hourly rental. And the video of a rather jerky wobbly bot doesn't inspire me to hire one for probably more than the hourly rate of a casual worker, no, not one bit.

BTW: That article clearly demonstrates one other thing about our human condition - we've turned cognitive dissonance and fantasizing into an artform. The lede line demonstrates this quite clearly:

"The AI-powered humanoid could be your next coworker." -- https://www.freethink.com/robots-ai/general-purpose-robot

Except.

No.

I mean, really? Many people won't work alongside people who have a different skin colour, gender, or ability. The robot(s?) in the video definitely show mechanical instabilities that lead to inaccuracies that will slow them down, meaning that working alongside them could become frustrating really quickly.

Let's Examine That

Most mobile humanoid robots aren't really ready for prime time yet. The Atlas parkour robots and Spot dogs of the robot world are very expensive machines that were specially trained for agility and mobility and even they muff their actions regularly. A less costly robot like Phoenix won't be able to be built with all the expensive hardware, so it'll be inherently mechanically handicapped.

Why are most robots put behind service desks or in "no humans" areas? Why aren't there more robot nannies spoon-feeding infants or elderly? Because they still regularly get out of registration with their surroundings and repeatedly damage the dolls they train on.

The best affordable robots are plagued with problems: they wobble, oscillate, can't repeat an action within close enough tolerances, and are slow. Humans "co-working" with them will hate them within the first few hours.

Other forms of robot are those swarm drones and guided missiles. Go look up the accuracy statistics on those, it's a bit scary actually. And yes, I'm aware that the skywriting drones are seemingly performing rock-solid shows, but you have to understand that they were built, equipped, and programmed quite painstakingly for just one thing, and that's to do those shows.

I'll mention the term "brittle AI" - this is a fairly simple form of AI which is just tasked with one job. It's called brittle because any other task will probably break it. Ask a kitchen pasta-boiling bot, consisting of usually just a pair of arms on an overhead track (with a really tiny set of instructions consisting of "take {object}, place in boiling water, wait X minutes for al dente, Y minutes for well cooked, then remove and plate up"), to babysit and you'll only have yourself to blame for the consequences.

So those show drones are a brittle combination of programming and hardware that are just good for those specific tasks. Imagine buying 1,200 cheap drones and Hue lights and trying to produce the same show.

Or take autonomous delivery drones. They're designed & built to traverse well-known sets of points along well-known routes and pay attention to their surroundings. And yet there are people making a living out of compiling delivery drone fails on YouTube...

I don't make or program robots but I think if I was - oh, say ... - an AI firm making robots, I'd work on giving the robots some really excellent but brittle AI as a base layer of programming.

This base AI's task will just be to learn how the damn body moves in the real world. "Oh - when I move the arm like this with a kilo of weight in the hand, my torso gives way ever so slightly and then I look like a drunken racoon stealing my second full bottle of vodka, maybe I can pre-emptively correct for this in future?" and that will be all that this AI program does. Know to within a millimetre how to recover if some asshole human co-worker bumps the robot for a joke, where that bottle finishes up if the robot stumbles - is it still upright? - and so forth.

THEN I'd have a second AI program that just gets told what to put where and with what and when and whom to, and asks the basic movement AI to "make it so."

And THEN I'd have a third level of AI that interacts with people and the surroundings and adjusts the task queue for 2nd level, which in turn tells the base level what to do.

I mean, if you're talking to someone and they say "Hand me that bottle of vodka wouldja" you don't plan the whole action in your conscious brain, do you? No, you look for a bottle, grab it, and hand it to Person B. We have all those different subsystems. So should our machines. Not just one system paddling like hell to do all of everything, every time.
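Here's a rough sketch of that three-layer idea in Python. It's only an illustration under my own assumptions: the class names, the recipe table and the keyword matching are all made up for this post, and a real robot would need actual balance and motor control where the stand-ins are.

```python
from collections import deque


class BodyLayer:
    """Level 1: the brittle base layer. It only knows how to move the body."""

    def __init__(self):
        self.log = []

    def execute(self, primitive, target):
        # A real base layer would run balance and motor control here;
        # this stand-in just records what it was asked to do.
        self.log.append((primitive, target))


class TaskLayer:
    """Level 2: turns a task into movement primitives for the body."""

    # Hypothetical recipe table: task name -> ordered movement primitives.
    RECIPES = {"hand_over": ["locate", "grasp", "carry", "release"]}

    def __init__(self, body):
        self.body = body
        self.queue = deque()

    def add_task(self, name, target, urgent=False):
        if name not in self.RECIPES:
            raise ValueError(f"unknown task: {name}")
        if urgent:
            self.queue.appendleft((name, target))  # jump the queue
        else:
            self.queue.append((name, target))

    def run_next(self):
        if not self.queue:
            return False
        name, target = self.queue.popleft()
        for primitive in self.RECIPES[name]:
            self.body.execute(primitive, target)  # "make it so"
        return True


class InteractionLayer:
    """Level 3: listens to people and adjusts the task queue."""

    def __init__(self, tasks):
        self.tasks = tasks

    def hear(self, sentence):
        words = sentence.lower().split()
        # Crude keyword matching stands in for real language understanding.
        if "hand" in words and "bottle" in words:
            self.tasks.add_task("hand_over", "bottle", urgent=True)
            return True
        return False


body = BodyLayer()
tasks = TaskLayer(body)
robot = InteractionLayer(tasks)
robot.hear("Hand me that bottle of vodka wouldja")
tasks.run_next()
print(body.log)
```

The point of the split is that the top layer never touches a motor and the bottom layer never has to understand a sentence. Each one stays small and brittle on purpose.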

30 years ago there was already a six legged "ant" that taught itself to walk in a desired direction. It basically had controllers for each leg that knew nothing, but got rewarded by the direction circuit for every millimetre Attila the Ant moved in the right direction, and it learned quite quickly. But that was all it could do.
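I don't have the original ant's design, so take this as a toy version of the idea rather than how Attila actually worked. It assumes each leg can only push backward, do nothing, or push forward, and that the "direction circuit" simply adds up the pushes. One leg at a time tries something at random and keeps the change only if the reward goes up (plain trial-and-error hill climbing).

```python
import random

LEGS = 6
STROKES = (-1, 0, 1)  # push the wrong way, do nothing, push the right way


def progress(strokes):
    """The 'direction circuit': distance moved the right way per step."""
    return sum(strokes)


def train(trials=500, seed=0):
    rng = random.Random(seed)
    strokes = [0] * LEGS  # every leg starts out knowing nothing
    best = progress(strokes)
    for _ in range(trials):
        leg = rng.randrange(LEGS)
        old = strokes[leg]
        strokes[leg] = rng.choice(STROKES)  # one leg tries something
        reward = progress(strokes)
        if reward > best:
            best = reward  # rewarded: keep the change
        else:
            strokes[leg] = old  # no reward: go back to the old habit
    return strokes, best


strokes, best = train()
print(strokes, best)
```

No leg ever "understands" walking. Each one only finds out whether its last guess got rewarded, and that's all this learner can ever do.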

And I know that "cobots" exist that will share space with humans without harming them, but they will by necessity be hampered by that limitation, and most managers will find ways to slowly take some of those limits off just to get production up. Robots cost money but can then work without pay, but humans cost a wage or salary, and if an accident happens, well, that's just one less wage to make up next month.

Also - the best robots are the industrial arm bots. They're fixed to a certain area, it's easy to get completely repeatable results out of them, and since they have the power to both accelerate AND stop quickly, they can be made fairly safe around humans. But they're not really intelligent.

You can have it relatively dumb, strong, and hard-working - or smart, a bit unstable, and slow. These limits aren't going to go away too quickly.

So yeah - we take the Pollyanna attitude that Phoenix will really quickly become a miracle of precision engineering, and we'll somehow educate people not to mess with the new robot worker just for the hell of it or get offended that it's a bit slow at the job thus slowing the human worker down, and also we have this perfect environment where the robot you work with does everything in sync with you and things will get along swimmingly. It'll happen, it just hasn't happened yet.

Dun-dun-dun-dun! Elephant in the room alert!

And we also ignore one other "cognitive dissonance elephant" in the room: that if the robot is so good that it's acceptable to the humans then . . well . . . remember the ACAPs?

This perfect robot arrives and is booted up and has shown that it can work alongside you perfectly. Q: How long after this point do you think it will be before Management just quietly hire another Phoenix and give you a Pink Slip?

Upper Level Management

Then there's also that top level AI. The lower level AI are quite focused on specific tasks. Our autonomic nervous system just does basic things like breathing and regulating hormones and heart rate to match activity, and our unconscious functions just know that when your top level AI says "I want to walk to - there." they make the legs and feet walk you there. But there's that top level that, even in us humans, isn't always perfect.

You know, the "hold my beer!" kinds of actions that we later look back on (if we survive...) with a mixture of "wow!" and regret. And we think an AI will be able to manage that better than we can. Uhhm... Nuh-uh... Our best two shots at higher-level AI at present are brittle machine learning and Generative Pre-trained Transformers, or GPT. If you just read that link in this paragraph, you'd know that GPT doesn't find what's right, it finds what looks like it should go next to the preceding words/phrases. GPT sometimes finds absolute bullshit that would make our most glorious Darwin Awards "hold-my-beer" moments look like amateur hour.

Give me a Phoenix robot with a GPT-style AI in it trying to figure out the most efficient way to get a bottle of vodka to me and I risk having the 'bot try to ram the vodka down my throat cork, bottle, and all because the sentence "Phoenix delivered the bottle of vodka quickly and efficiently" sounded better to the GPT than "Phoenix got a glass, set it down, filled it with a 20ml shot of vodka from the bottle in its other hand, set down the bottle, and handed the glass to Ted."
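To show what "finds what looks like it should go next" means, here's a toy word-pair counter in Python. It is emphatically not how GPT is built (the real thing is a huge neural network working on tokens), and the training text and function names are invented for this example. It only demonstrates the principle of picking the likeliest next word rather than the right one.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words followed it in the training text."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_text(follows, start, length=4):
    """Repeatedly append whichever word most often came next in training."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])
        if not options:
            break  # never saw anything after this word, so stop
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


# Made-up training text: "quickly" simply shows up more often than "carefully".
corpus = (
    "phoenix delivered the vodka quickly . "
    "phoenix delivered the vodka quickly . "
    "phoenix poured the vodka carefully"
)
model = train_bigrams(corpus)
print(continue_text(model, "phoenix"))
```

The counter goes with "delivered the vodka quickly" purely because that phrasing was more common in what it was fed. Whether quickly is a good idea never enters into it.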

But because ACAP, they'll rush these decrepit POS robots to market as quickly as they can, and then we'll have to make the Three Laws Of Robotics, and then the "One Thousand One Hundred Clauses To The Three Laws Of Robotics" to protect against the inevitable manufacturers' race to the bottom in terms of making the cheapest possible robots and selling as many as possible. Look what happened with 3D printers for FSM's sake.

We need lawmakers to come from among the companies making the robots and AIs, because the lawmakers we do have can't think past their own pants and ever-ready-for-grift-money pockets. Most are also religiously bent, so to them AI and robots sound like something wanting to be God and they can't have that, so they make underlings do the research for them. And then ignore their advice and vote on grift lines.

Also, I can't help thinking this stuff is dumbing us down too. Because things like this. Apparently we have people aspiring to imitate GPT, which will be a pale shadow of what's itself just a pale shadow of human creativity. I'm not sure if this guy is a publicity whore or if he's more of a brilliant performance artist than a writer.

And M John Harris is apparently a good writer, so this raises the question of why he'd want to imitate a mediocre thing like GPT. Also he shows some kind of understanding deficit about GPT - it "writes" nothing itself, it scours millions of people's writing and then tries to predict what word fits the training data best, then the next word, and the word after that, and so on. And all these words were written by different people, the rule and training data sets were from writings of other individuals. GPT doesn't develop a unique writing style that MJH can imitate, it develops all the writing styles and mashes them together.

Oh look:

"Do you think GPT will ever write an original piece of writing?

As an AI language model, I don't possess personal opinions or beliefs. However, I can provide you with some insights on the topic.

The GPT models, such as GPT-3, are capable of generating text that appears to be original and creative. They can produce coherent and contextually relevant content based on the input they receive. However, it's important to note that GPT models generate text by predicting the most probable next word given the preceding context, utilizing the patterns and information it learned during training.

While GPT models can generate text that seems original, they do not possess true creativity or originality in the same way humans do. They rely on patterns and examples from the data they were trained on, rather than forming entirely new ideas or concepts. They excel at mimicking and emulating human-generated text, but their output is ultimately constrained by the data they have been exposed to during training.

That said, researchers are constantly working on advancing AI models and exploring ways to enhance their capabilities. It's possible that future iterations or new models may exhibit more originality, creativity, and the ability to generate truly unique content. However, the question of whether AI models can achieve true originality in the same way humans do is still a subject of debate and ongoing research."

In other words, to imitate GPT's "style" you have to just imitate everyone's styles and average them together. The bit I highlighted is probably the only thing GPT outputs that's distinctive - and it was probably written omne quod est by the developers...

Why Did I Really Write This? Will I Ever Shut Up?

Because I won't let the bastards get away with anything, and I will support every change that improves life for everyone. And I'll never stop until the last right wing capitalist is silent, exiled, or has passed away.

Because right wing capitalists need to be exposed, fought, lampooned, and kicked up the arse every time they stick their heads up.

Because the whole world could survive - and survive comfortably at that - if it wasn't for the wealth inequality that right wing capitalism creates.

And because when you come down to it, we all live on this planet, this one single habitat that we have, and we need to stop it being exploited for something as stupid and ultimately valueless as money.

But it needs YOU to do things too - share this article, write emails and sign petitions, scroll back up and subscribe to my newsletter and donate to allow me to increase the reach of these posts, to let me continue making these posts.

Remember that we're all Earthlings on spaceship Earth.

And above all - "Thou Shalt Keep The Bastards Honest."

* No, I didn't write the acronym wrong. All Capitalists Are Pants.
